The origins of web performance in SEO

Back in April 2010, Google announced the inclusion of site speed as a new signal in its search ranking algorithms. The signal was introduced to reflect how quickly a website responds to web requests. At the time, Google stated that it carried little weight as a ranking factor, but since then site speed and web performance have come to play a much more important role, not only as a differentiator in user experience but also as an important factor in successful search engine optimization (SEO).

Since 2010, numerous enhancements and announcements have strengthened Google's position on the importance of web performance and how it can affect SEO signals. In this article we look at some of the ways web performance can contribute to better SEO.

Mobile-first indexing

One of the most important changes in search in recent years has been the evolution of mobile-first indexing. Both Google and Bing now lead with mobile-first indexing of web pages, recognizing that most searches now occur on mobile devices. Ultimately, this means that if your website delivers a weak user experience on mobile devices, your search rankings will suffer, and you will see this primarily as lower organic traffic.

The mobile-first crawling and indexing processes look at many different aspects of your web pages, but rendering quickly on a mobile device is effectively mandatory.

Current best practice puts this target at 3 seconds, but rendering the viewport within 1 second on a mobile device is where excellence in user experience is achieved.

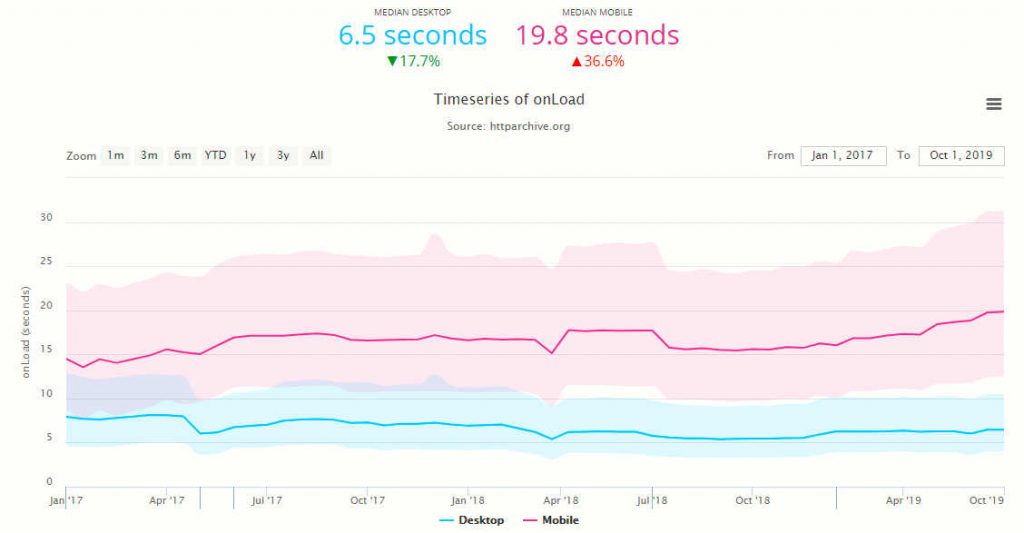

The HTTP Archive provides invaluable insight into how far web authors are from meeting these objectives. Of the many metrics we could look at, figure 1 shows data from January 2017 to October 2019: the median mobile web page takes 19.8s to load, and even the median desktop web page takes 6.5s. Unfortunately, on mobile these timings are rising.

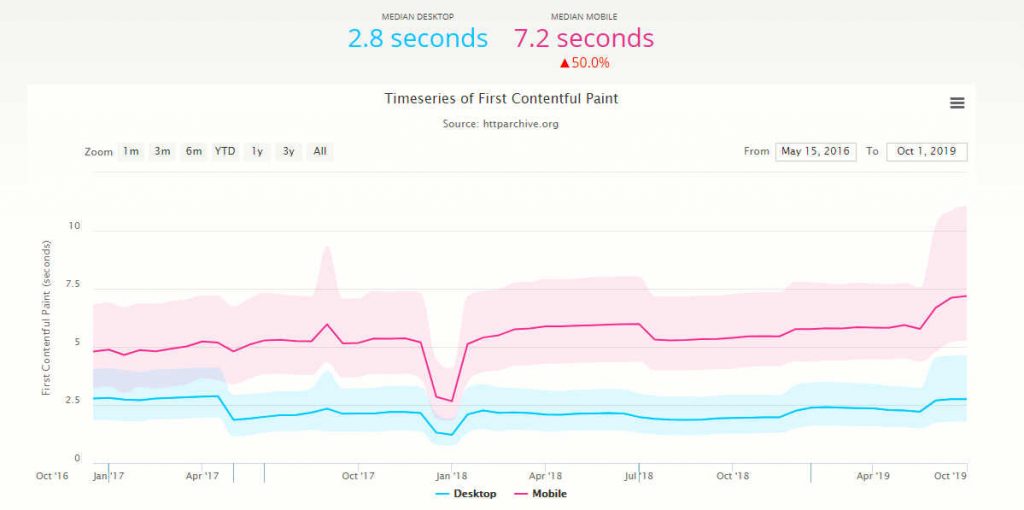

Figure 2 underlines how slowly websites deliver content to the viewport. Google defines First Contentful Paint (FCP) as the time from navigation to when the browser renders the first bit of content from the DOM. However, this marks only the first content painted; the rest of the viewport must still be rendered, so on a mobile device the median time to render the viewport completely is greater than 7.2s. While much has been said over the last decade to promote a performance culture, performance has historically not been given sufficient priority, and it still is not.

Although Google still insists that relevant content is the most important criterion for good search rankings, ensuring that your web pages deliver quality content quickly can send positive signals that improve search rankings.

AMP (Accelerated Mobile Pages)

In response to the proprietary Facebook Instant Articles and Apple News services, Google announced its open source Accelerated Mobile Pages (AMP) initiative. The aim of AMP is to enable the creation of fast-loading web pages specifically for mobile devices. Since its inception, the technology has been highly successful, with billions of web pages published.

By restricting which HTML, CSS and JavaScript capabilities may be used, AMP limits how a web page is built and what it can do. In return, AMP web pages are delivered quickly to users on mobile devices, effectively as cached static pages served from an AMP-specific CDN. Consequently, AMP web pages score well on the site speed signals used for SEO ranking. Although AMP targets mobile devices, AMP web pages also load on desktop devices.

However, AMP is not for everyone. The framework is still being developed and some features are marked experimental, which makes it difficult to deploy business-critical features on production websites. Refer to amp.dev for a fuller understanding of AMP, how it works and how to build AMP-based web pages.

PPC (Pay Per Click)

If your SEO strategy includes an element of PPC, then slow web pages are probably costing you money in every ad auction you bid in. If you use Google Ads, then to have the best chance of winning an ad auction at the lowest price, you need to maintain a good Ad Rank and Quality Score in each auction.

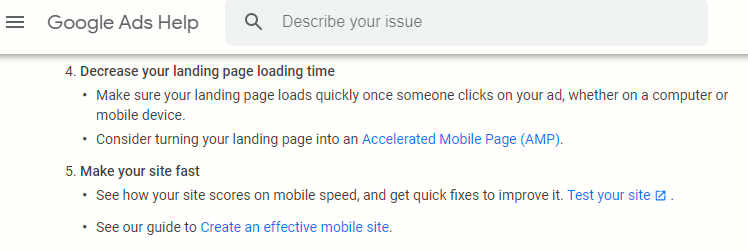

Landing page experience is one of the many components of both Ad Rank and Quality Score, and Google gives clear advice on what makes a good landing page.

Figure 3 shows the relevant web performance guidelines from the Google Ads (formerly AdWords) guidance on landing page experience. It states clearly how important web performance is to landing page experience and Ad Rank, and therefore to your ability to compete in ad auctions.

In the worst case, users who leave your website because a landing page loads slowly signal a poor landing page experience, which may lower your Ad Rank. Consequently, ensuring fast page loads can boost your return on PPC spend. Refer to Google's "Understanding landing page experience" for the complete guidance.

Indexing pages and crawl budget

Crawl budget is perhaps not naturally a web performance issue, but if you are responsible for maximizing server utilization, especially on busy server configurations, then optimizing the crawl budget assigned by Google, Bing and other web crawlers is important.

A crawl budget is the amount of resource a crawler assigns to a website to crawl its web pages and then index or de-index them. Because crawlers split their time across many websites, they can crawl only a small portion of your website on each visit, so the crawl budget is used to set priorities.

Web performance matters here because the faster each web page is crawled, the more web pages can be crawled within the available crawl budget. As a result, your web pages are indexed or de-indexed more quickly.
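The relationship is simple arithmetic. The sketch below assumes, purely for illustration, that a crawl budget can be expressed as a fixed amount of fetch time per crawler visit and that pages are fetched one after another; real crawlers do not publish their budgets in this form, and `pages_per_visit` and `visits_to_cover` are hypothetical helpers.

```python
# Back-of-the-envelope crawl budget model. Assumption (hypothetical): the
# budget is a fixed amount of fetch time per crawler visit, and pages are
# fetched sequentially. Integer milliseconds avoid float rounding surprises.

def pages_per_visit(budget_ms: int, avg_fetch_ms: int) -> int:
    """How many whole pages fit into one visit's crawl budget."""
    if avg_fetch_ms <= 0:
        raise ValueError("average fetch time must be positive")
    return budget_ms // avg_fetch_ms


def visits_to_cover(total_pages: int, budget_ms: int, avg_fetch_ms: int) -> int:
    """How many visits the crawler needs to fetch every page once."""
    per_visit = pages_per_visit(budget_ms, avg_fetch_ms)
    if per_visit == 0:
        raise ValueError("budget too small to fetch a single page")
    return -(-total_pages // per_visit)  # ceiling division


# Hypothetical 10,000-page site with 60 s of budget per visit:
for fetch_ms in (1200, 600, 300):
    print(f"{fetch_ms} ms/page: {pages_per_visit(60_000, fetch_ms)} pages per visit, "
          f"{visits_to_cover(10_000, 60_000, fetch_ms)} visits to cover the site")
```

Under these assumptions, halving the average fetch time doubles the pages crawled per visit and halves the number of visits needed to cover the whole site.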

Web performance is also affected by a phenomenon known as index bloat. This occurs when a high number of low-value or non-existent web pages are indexed by search engines. These URLs are requested both by users who click on them in search results and by crawlers, and because they generate HTTP 404 responses they place an unnecessary load on your servers. Eventually crawler processes de-index such URLs and stop crawling your website for them.

To maximize crawl budget and reduce server load, identify these URLs and return an HTTP 410 response instead of an HTTP 404.

Unlike the default 404 Not Found response, a 410 Gone response signals that you know the web page no longer exists and want it removed from the crawl process, so crawlers can act on this information.
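A minimal sketch of serving 410 for deliberately removed pages, using only Python's standard library. The in-memory `LIVE_PATHS` and `RETIRED_PATHS` sets and the `status_for` helper are hypothetical; a real shop would look SKUs up in its catalogue, and most sites would configure this in their web server or application framework instead.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical data: a real site would query its product database or CMS.
LIVE_PATHS = {"/", "/products/sku-1001"}
RETIRED_PATHS = {"/products/sku-0042", "/products/sku-0043"}


def status_for(path: str) -> HTTPStatus:
    """Choose the response status for a request path."""
    if path in LIVE_PATHS:
        return HTTPStatus.OK
    if path in RETIRED_PATHS:
        # Deliberately removed: tell crawlers the page is gone for good.
        return HTTPStatus.GONE
    # Unknown URL: fall back to the default 404 Not Found.
    return HTTPStatus.NOT_FOUND


class ShopHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Ignore any query string when deciding the status.
        status = status_for(self.path.split("?", 1)[0])
        body = f"{status.value} {status.phrase}\n".encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, run `HTTPServer(("127.0.0.1", 8000), ShopHandler).serve_forever()` and request `/products/sku-0042`; the server answers 410 Gone, while unknown paths still get 404.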

This problem is especially prevalent in environments with high page turnover, such as ecommerce retail, where invalid or expired SKUs are still crawled. In these environments thousands of unnecessary and costly crawler requests can go unchecked, which may harm server capacity, especially at busy times.

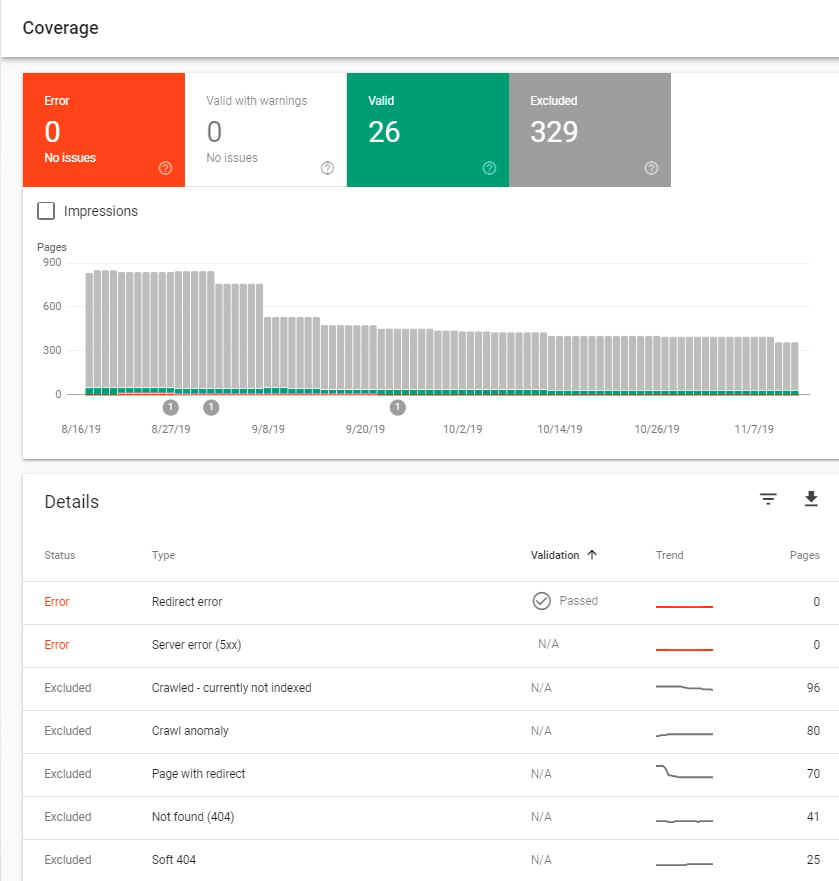

Several software tools can help identify these URLs. Both Bing Webmaster Tools and the Google Search Console Coverage report (figure 4) can help identify persistent HTTP 404 responses and other web pages in error.
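Your own access logs can complement these reports. The sketch below assumes logs in the Common Log Format (the Combined format, which appends referrer and user agent, also matches) and lists paths that keep returning 404; the `MIN_HITS` threshold is an arbitrary, hypothetical value. Treat the output as candidates to review before switching them to 410.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# host ident user [time] "METHOD path PROTOCOL" status size
CLF = re.compile(r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (\S+) [^"]*" (\d{3}) \S+')
MIN_HITS = 3  # hypothetical threshold for "persistent"


def persistent_404s(lines: Iterable[str], min_hits: int = MIN_HITS) -> List[Tuple[str, int]]:
    """Return (path, count) pairs answered with 404 at least min_hits times, most frequent first."""
    counts = Counter()
    for line in lines:
        match = CLF.match(line)
        if match and match.group(2) == "404":
            counts[match.group(1)] += 1
    return [(path, n) for path, n in counts.most_common() if n >= min_hits]
```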

Rendering for indexing

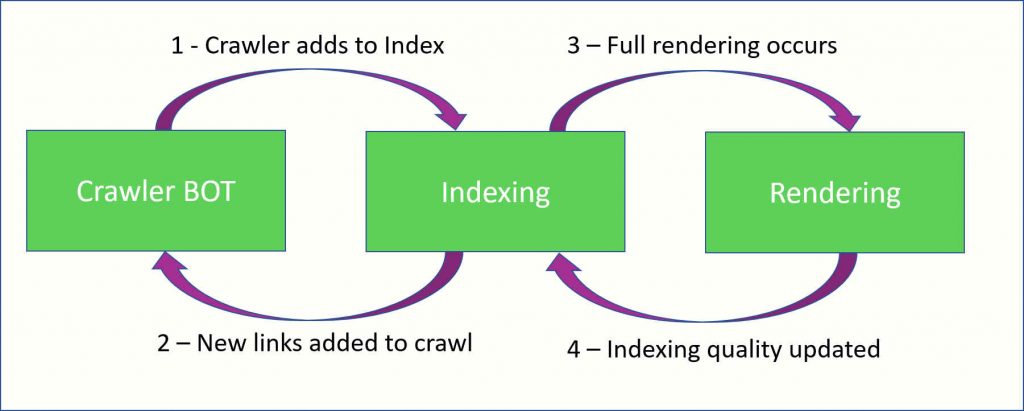

Crawler bots must perform their task quickly and move on as they traverse billions of web pages. To index web pages fast, they cannot wait while the resources on each page load and the page renders, so the search indexing process is split into three main functions: crawling, indexing and rendering.

Bots crawl websites and send the data they collect to the indexing function. The parsed HTML stream is added to, or updated in, the search index with the basic information collected. Key web performance information, such as when the data started to arrive, the size of the HTML stream, whether it is compressed and the HTTP response code, is available at this stage along with the semantic structure of the page.

This approach has the advantage of quickly adding newly identified web pages to the search index without having to fully render, or validate the effect of JavaScript that may reside on the web page.

A further task of the indexing process is to identify new links that have been found from the HTML stream and then initiate requests back to the crawler to schedule appropriate crawls of the new URLs.
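The link-discovery step can be illustrated with Python's standard `html.parser`. This is a sketch of the general idea only, not how Google or Bing implement it; `LinkCollector` and `discover_links` are hypothetical names. Relative links are resolved against the page URL and fragments are dropped, since they point back into the same document.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collects absolute http(s) URLs from <a href> tags, in document order."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links, then strip any #fragment.
                absolute, _ = urldefrag(urljoin(self.base_url, value))
                # Skip mailto:, javascript: etc. and avoid duplicates.
                if absolute.startswith(("http://", "https://")) and absolute not in self.links:
                    self.links.append(absolute)


def discover_links(base_url: str, html: str) -> List[str]:
    """Return the URLs a crawler could schedule after parsing this page."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.links
```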

The indexer then schedules a full render of the web page. A second bot renders the page completely, executing and evaluating any JavaScript on it.

Full rendering of web pages is very resource intensive, so this second crawl occurs when resources are available, sometimes weeks or months later. Once it completes, the index is updated with the information gathered. From a web performance perspective, full rendering and JavaScript processing expose a fuller spectrum of web performance metrics, which can then be stored in the index.

Consequently, web performance metrics and criteria are available to be applied as signals in search ranking calculations and processes.

This asynchronous approach has enabled web crawlers to index more complex web pages that rely on JavaScript for rendering. The two main web crawlers, Bing and Google, both support "dynamic rendering", which is aimed at reducing the delay between the first and second crawl of a web page.
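With dynamic rendering, the site itself decides which version of a page to send: crawlers receive HTML that has already been rendered (for example by a headless browser), while human visitors receive the normal JavaScript application. Note that Google now describes dynamic rendering as a workaround rather than a long-term solution. The sketch below shows only the routing decision; `BOT_TOKENS` is a hypothetical, incomplete list, the file names are placeholders, and because user agents are trivially spoofed a production setup would also verify crawler IP addresses.

```python
# Hypothetical, incomplete list of crawler user-agent substrings.
BOT_TOKENS = ("googlebot", "bingbot")


def is_search_crawler(user_agent: str) -> bool:
    """Naive check: does the user agent contain a known crawler token?"""
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def choose_variant(user_agent: str) -> str:
    """Return which (placeholder) version of the page to serve."""
    if is_search_crawler(user_agent):
        # Static HTML produced ahead of time, no JavaScript needed to see content.
        return "prerendered.html"
    # Client-side app: the browser runs JavaScript to render the content.
    return "app-shell.html"
```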

Don't forget malicious and malware bots

Your valuable server resources are also visited by malicious bots that provide little or no value to your domain. These bots generally ignore the rules you define in robots.txt or try to enforce through meta tags.

They consume valuable server resources as they crawl your web pages. Their activities include scraping, email harvesting, spamming, hacking and other destructive actions.

Many vendors provide comprehensive ways to stop bad bots, and many good-quality CDNs offer effective and efficient protection. However, a simple DIY method is to track bots that ignore robots.txt and meta directives and deny them access using .htaccess, taking care not to block well-behaved crawler bots inadvertently.
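The tracking step can be sketched with Python's `urllib.robotparser`, which applies robots.txt rules the way a well-behaved crawler would. The robots.txt content, the `robots_violators` helper and the input pairs are hypothetical. The input must already be limited to automated traffic, since human visitors never read robots.txt and their browsers would otherwise be flagged too. Review flagged user agents by hand before adding any .htaccess rules.

```python
from collections import Counter
from typing import Iterable, Tuple
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; /internal/ is not linked for human visitors.
ROBOTS_TXT = """\
User-agent: *
Disallow: /internal/
Disallow: /search
"""


def robots_violators(requests: Iterable[Tuple[str, str]],
                     robots_txt: str = ROBOTS_TXT) -> Counter:
    """Count, per user agent, requests that robots.txt disallows for that agent.

    `requests` holds (user_agent, path) pairs taken from bot traffic only.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    violations = Counter()
    for user_agent, path in requests:
        # can_fetch matches the rules against the path part of the URL.
        if not parser.can_fetch(user_agent, path):
            violations[user_agent] += 1
    return violations
```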

Being mobile-friendly

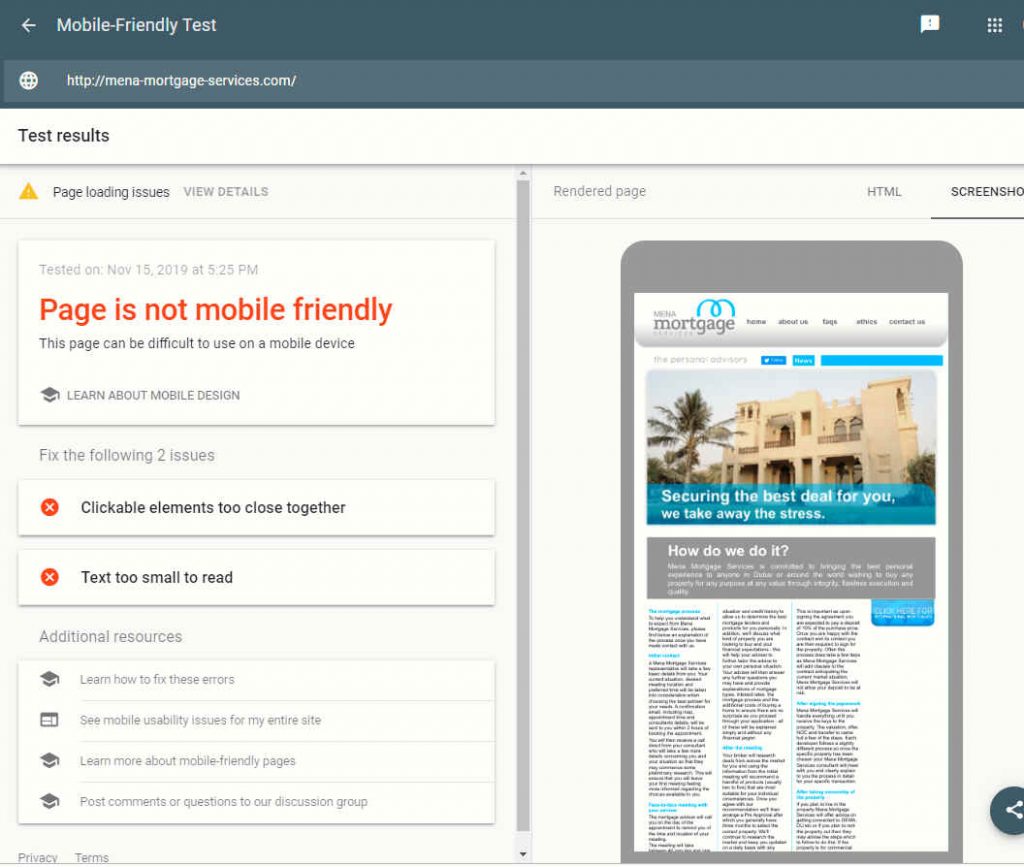

Bots see web pages differently from humans, so it is important to ensure that bots judge each web page to be mobile-friendly. You do not need to check every single web page, but each template should be tested on several web pages to ensure that crawler bots see your pages as mobile-friendly.

Several vendors provide mobile-friendly test facilities that parse and load your web page and then confirm or reject it as mobile-friendly.

Google announced the sunsetting of its mobile-friendly test options in April 2023. Because ensuring that web pages are mobile-friendly is an important aspect of SEO, this move is somewhat counter-intuitive. Google now recommends using its more complex Lighthouse tools to assess mobile-friendliness.

The Bing mobile-friendly test can be found at https://www.bing.com/webmaster/tools/mobile-friendliness.

Simpler, easy-to-use options exist on the web. One such tool can be found at https://www.experte.com/mobile-friendly. It lets you review multiple web pages concurrently and clearly reports any inhibitors to mobile-friendliness.

On failure, the tool provides a snapshot of the viewport and insights into why the web page is not mobile-friendly. The HTML is also made available to aid further analysis.

Using both tools is recommended, as bots test and index web pages differently.
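Alongside these tools, a quick pre-check across your templates can catch one common cause of failure: a missing responsive viewport declaration, `<meta name="viewport" content="width=device-width, ...">`. The sketch below is a hypothetical heuristic built on Python's standard `html.parser`, not a substitute for the tools above; it ignores font sizes, tap-target spacing and content wider than the screen.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        values = dict(attrs)
        if (self.viewport is None and tag == "meta"
                and (values.get("name") or "").lower() == "viewport"):
            self.viewport = values.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page's viewport meta tag sets width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    # Split "width=device-width, initial-scale=1" into key/value settings.
    settings = {}
    for part in finder.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"
```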

The web performance advantage

Traditionally, web performance initiatives and governance have struggled to gain sufficient traction and priority when scarce development resources are needed for other activities considered a higher business priority.

However, with the advent of mobile-first indexing and the ability of web crawlers to render web pages and observe the effect of JavaScript on them, addressing web performance issues can deliver faster web pages, improve search rankings, reduce server load, potentially reduce the cost of PPC campaigns and help win more ad auctions through a better end-user experience. As such, web performance should now be an important thread of any SEO activity.

Consequently, SEO professionals should engage with web performance specialists to improve conversion rates, page views and search engine rankings.