2020 is not only a new year but also the start of a new decade, so in this article we look at how some industry-accepted web performance best practices have been affected by the technology advances of the past ten years.

In the beginning

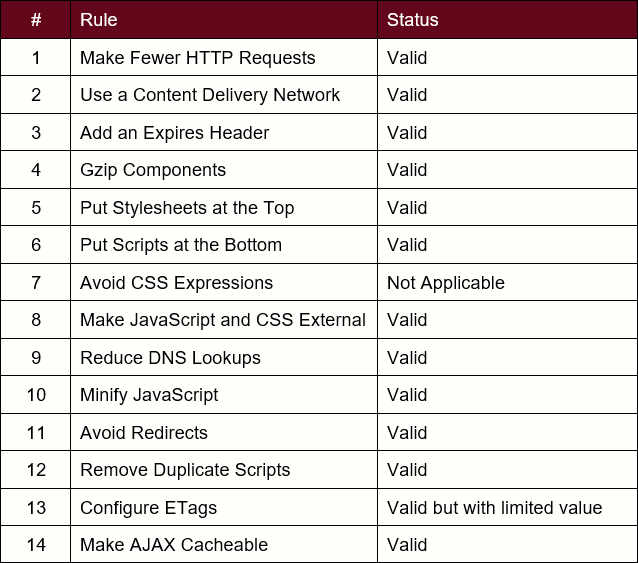

For many, web performance as a discipline began with Steve Souders, who wrote his books High Performance Web Sites and Even Faster Web Sites over 10 years ago.

In his work he developed 14 rules, listed below, that when applied should speed up the loading of web pages and the performance of websites.

- Rule 1 – Make Fewer HTTP Requests

- Rule 2 – Use a Content Delivery Network

- Rule 3 – Add an Expires Header

- Rule 4 – Gzip Components

- Rule 5 – Put Stylesheets at the Top

- Rule 6 – Put Scripts at the Bottom

- Rule 7 – Avoid CSS Expressions

- Rule 8 – Make JavaScript and CSS External

- Rule 9 – Reduce DNS Lookups

- Rule 10 – Minify JavaScript

- Rule 11 – Avoid Redirects

- Rule 12 – Remove Duplicate Scripts

- Rule 13 – Configure ETags

- Rule 14 – Make AJAX Cacheable

The rules apply to the many different aspects of building a web page but in principle can be considered in 3 main categories.

- Reduce resource usage

- Enable caching

- Eliminate blocking

Technology has significantly progressed over the past 10 years and it is in this context that this article reviews how relevant each rule is today.

Rule 1 – Making Fewer HTTP Requests

Quite correctly, the concept of this rule is that if the web page does not require a resource, then it should not be loaded. In 2010 a resource would be requested using HTTP/1.1 or an earlier protocol. The protocol had many limitations, notably that a connection could service only one request at a time, so each concurrent request needed its own connection between the client and the server. Multiple TCP connections could be opened to enable parallelism, but the use of TCP in this way was not optimized.

The number of parallel connections to a domain from a client that a browser would support was also a limitation. This limit varied by browser and acted as a constraint on the number of resources that could be downloaded concurrently from a domain.

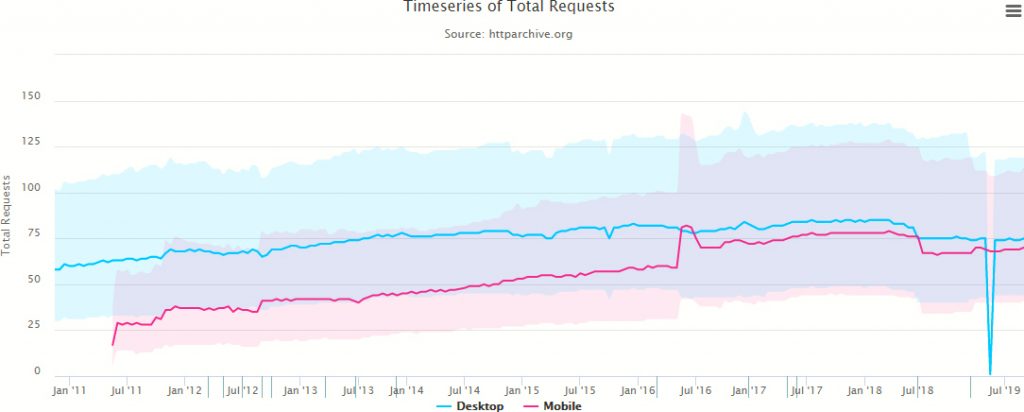

Sourced from HTTPArchive, figure 1 shows how the total number of requests at the median (p50) has steadily increased over the past decade for both mobile and desktop devices. The median is shown as the solid line, with the p10 (10th percentile) to p90 (90th percentile) range shown by the shaded areas.

In recent years these metrics have almost converged, which could imply that there is little difference between the resources served, irrespective of device.

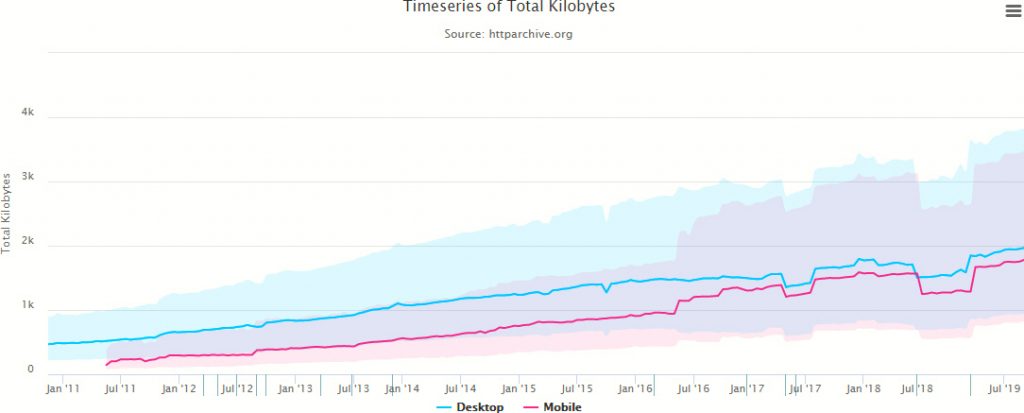

Figure 2 shows how the number of kilobytes loaded per page has continually increased over the decade, with page weight at p50 increasing by 164% for desktop and by over 200% for mobile web pages.

The causes of these increases are many, such as multimedia, frameworks and third-party software, but on the above evidence very few organisations and web authors appear, to date, to adhere to this tenet of web performance.

Enter HTTP/2

Through the SPDY programme, Google created an experimental protocol that the IETF used to derive the basis of HTTP/2, with the full specification published mid-decade in 2015.

This new protocol looked to address many of the known limitations of HTTP/1.1 including those outlined above.

HTTP/2 optimizes TCP connections by establishing only a single connection between the client and server, and all resource requests use the same connection. This approach frees the browser from any concurrent resource request limitations. These changes had many beneficial effects.
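To see why a single multiplexed connection matters, the difference can be sketched with a deliberately simple model. The function names and the limit of six connections per domain are assumptions made for illustration (the limit varied by browser), and the model ignores bandwidth, TCP slow start and packet loss.

```python
import math


def http1_request_rounds(resource_count: int, max_connections: int = 6) -> int:
    """Rounds of requests needed when each connection carries one request at a time.

    max_connections is an assumed per-domain browser limit, not a fixed standard.
    """
    if resource_count <= 0:
        return 0
    return math.ceil(resource_count / max_connections)


def http2_request_rounds(resource_count: int) -> int:
    """Idealised HTTP/2: every request is multiplexed over one connection."""
    return 1 if resource_count > 0 else 0


# A hypothetical page that loads 70 resources from one domain.
rounds_h1 = http1_request_rounds(70)  # 12 rounds with 6 connections
rounds_h2 = http2_request_rounds(70)  # 1 round in this idealised model
```

Real pages rarely behave this cleanly, but the model shows why the connection limit was such a constraint under HTTP/1.1.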

HTTP/2 has been a success since its introduction. HTTPArchive puts its adoption at the end of 2019 at almost 60% of all resource requests. Over the same period the average number of TCP connections per web page has halved. This is impressive and, when taking into account the growth in page weight shown in figure 2, indicates how much more efficient HTTP/2 is than HTTP/1.

In conclusion, the objective of making fewer HTTP requests has been offset by the introduction of a more efficient technology that has supported the underlying growth of resources requested and the increase in page weight that has accompanied this.

However, as the end users of websites demand even faster delivery of web pages, the continual increase in the number of HTTP requests will need to be reconsidered.

Rule 2 – Use a Content Delivery Network

When this rule was devised a content delivery network (CDN) was a relatively new concept in an early adoption phase. Today, CDNs are fairly pervasive and well used by web authors and architects as key infrastructure components.

Many CDN providers began by providing an internet-level security service to defend against evolving security threats. However, a CDN can provide highly scalable services that can deliver high performance even when your website is under increasing load.

Through caching technologies a CDN effectively extends some aspects of your web server so that it appears closer to the end users accessing your web pages. The ability to cache in this way, and at many different locations, can eliminate considerable latent delay (latency) which in turn leads to loading web pages faster and with it improved user experience.

As complex infrastructure components, CDNs, along with edge computing, are evolving fast. Each provider delivers content in different ways or may specialize in certain types of data transfer, such as images. Consequently, it is important to understand the strengths of the CDNs that are available to support your digital services.

The take-up of CDNs has accelerated across the decade and they are now commonly used by organisations, sometimes unknowingly. Consequently, this rule is still valid but can also be considered as adopted.

Rule 3 – Add an Expires Header

The Expires header is one of several cache control directives that are designed to speed up the loading of a web page. It works by establishing an expiration date for the resource that the client browser can check to determine whether the resource can be used or a new version must be loaded from the server.

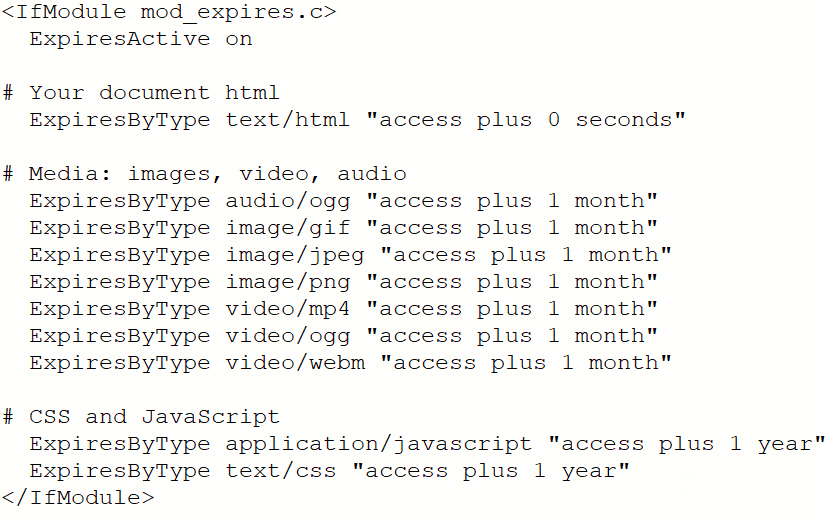

The expiration date is generally a date in the future and should be aligned to a website caching policy that optimizes the use of both network and server resources. For example:

Expires: Wed, 30 Dec 2020 09:15:17 GMT

There are several ways to set caching policy, such as programmatically, on a server or on a CDN edge server. Figure 3 shows an example of how to code the directive on an Apache server.

The HTML5 Boilerplate project has documented a sample set of expires headers for a wide range of resources that provides a good starting point for establishing a caching strategy.

An alternative to Expires is the max-age directive of the Cache-Control header, which sets the amount of time in seconds that the resource is valid before expiry, as opposed to the absolute date set by Expires.
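As a minimal sketch of how the two directives relate, the snippet below derives both from the same cache lifetime. The `caching_headers` helper and the 364-day lifetime are hypothetical and chosen only to reproduce the Expires example above. A real policy would set these values per resource type.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def caching_headers(now: datetime, lifetime: timedelta) -> dict:
    """Build an absolute Expires date and the equivalent relative max-age.

    `now` must be a timezone-aware UTC datetime so the date can be
    rendered in the HTTP date format ending in "GMT".
    """
    expires_at = now + lifetime
    return {
        "Expires": format_datetime(expires_at, usegmt=True),
        "Cache-Control": f"max-age={int(lifetime.total_seconds())}",
    }


# Hypothetical request time and lifetime, for illustration only.
request_time = datetime(2020, 1, 1, 9, 15, 17, tzinfo=timezone.utc)
headers = caching_headers(request_time, timedelta(days=364))
# headers["Expires"]       -> "Wed, 30 Dec 2020 09:15:17 GMT"
# headers["Cache-Control"] -> "max-age=31449600"
```

The absolute date depends on the clocks of both server and client agreeing, whereas max-age is relative to the time the response was received.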

In general, web site publishers understand caching strategy and apply caching directives that are aimed at improving performance. However, experience shows that many opportunities to apply caching are missed and that insufficient attention is potentially paid to ensuring credible expiration dates are applied and maintained. Consequently, continuing to pay attention to this rule, or similar caching techniques, will deliver performance rewards, while reducing the load on both server and network resources.

Rule 4 – Gzip Components

If compression is not configured, a server may send a file in response to a client request uncompressed and in its native file format, which is generally textual.

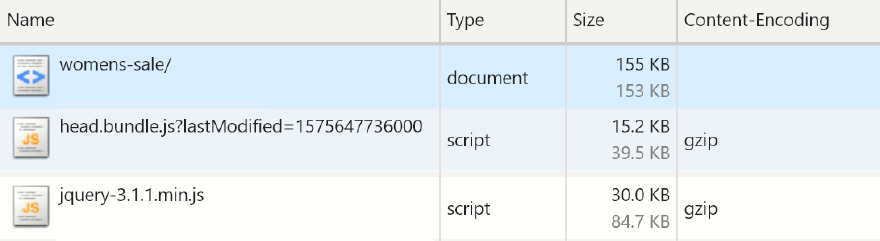

HTML, CSS and JavaScript are major resource contributors to building a web page and uncompressed their combined size can quickly add up. For example, figure 4 shows 3 resources from a current live web page on an eCommerce website and shows how effective compression can be.

The Content-Encoding field shows that the main HTML stream is not compressed so the server sends down 155 KB of uncompressed data. This unnecessarily consumes network resources and also delays the ability of the browser to parse and process the HTML stream.

The other two resources are JavaScript files and, as the Content-Encoding field indicates, Gzip is used as the compression method. The result of the compression is indicated by the two numbers in the Size column.

The top number is the compressed size and the bottom number is the original uncompressed size. The compressed size also includes the response metadata associated with the file, which is why the top size value for the HTML (document) is larger than the bottom one.

Servers will compress a file if the client browser indicates, through the Accept-Encoding header, that it can receive compressed data. Three main compression methods are in use today: Gzip, Deflate and Brotli. Brotli generally provides the greatest level of compression but the trade-off for this is increased CPU processing time.
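The negotiation described above can be sketched as follows, using only Gzip from the Python standard library. The function names and the sample markup are assumptions for illustration, and the parsing is simplified: it ignores the quality values (such as `gzip;q=0`) that a real server must honour.

```python
import gzip


def choose_encoding(accept_encoding: str) -> str:
    """Return "gzip" if the client lists it in Accept-Encoding, else "identity"."""
    offered = {
        token.split(";")[0].strip().lower()
        for token in accept_encoding.split(",")
    }
    return "gzip" if "gzip" in offered else "identity"


def encode_body(body: bytes, accept_encoding: str):
    """Return the Content-Encoding value and the bytes to send."""
    encoding = choose_encoding(accept_encoding)
    if encoding == "gzip":
        return encoding, gzip.compress(body)
    return encoding, body


# Repetitive markup, typical of HTML, compresses very well.
html = b"<div class='product'><span>Item</span></div>\n" * 500
encoding, payload = encode_body(html, "gzip, deflate, br")
saving = 1 - len(payload) / len(html)  # fraction of bytes saved on the wire
```

A client that sends no Accept-Encoding header receives the body unchanged, which is the uncompressed case shown for the HTML document in figure 4.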

The benefits of this rule to web performance are considerable yet, as the example shows, this simple-to-apply feature is not always exploited. Consequently, there is considerable merit in ensuring this rule is applied to web site implementations.

Rule 5 – Put Stylesheets at the Top

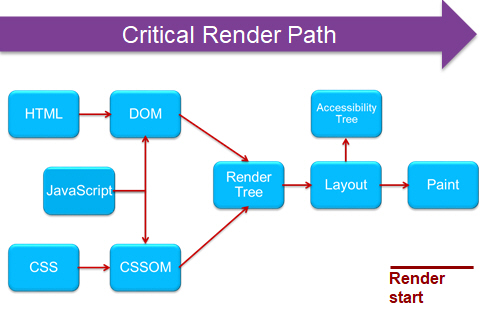

Adhering to the critical render path is fundamental to achieving good web performance as it is a process that all browsers use to build and render web pages.

Figure 5 represents the data flow in the critical render path. Starting with the HTML stream there are 2 main threads, the Document Object Model (DOM) and the Cascading Style Sheet Object Model (CSSOM), that the browser looks to prioritize as it builds the web page.

The CSSOM is used to tokenise the CSS styles so they can be applied against the DOM, which itself is built from tokenising the HTML stream. Once the CSSOM is built and enough of the DOM is available, the browser will begin to build the Render Tree which combines the tokens into a physical structure that the browser can then layout and paint, or composite, to the viewport or the non-visible area of the web page.

These are the primary processes of the browser and by default do not include JavaScript. However, if uncontrolled, the loading and parsing of JavaScript can delay these time-critical processes.

Rule 5 was originally defined to prioritize CSS as the first resource the browser came across and would therefore request it to be loaded and processed first. A decade ago, this was a sensible approach as browsers did little or no prioritization of resource loading. Today, browsers are much smarter and have implemented a pre-load processing thread which scans the HTML stream and then prioritizes resource loading based on MIME type, placement in the HTML stream and any HTML-defined resource hints.

This means that web authors can now change browser default priorities using HTML features like the preload resource hint to prioritize important resources such as a hero image.

Rule 5 is still valid but it should be considered alongside a number of new best practices that have evolved for the placement and management of CSS in the HTML stream, such as critical CSS and in-lining CSS, which will be covered in a later article.

Rule 6 – Put Scripts at the Bottom

Like rule 5, rule 6 addresses a historical need for prioritizing the critical render path by preventing render-blocking JavaScript from delaying building the CSSOM, DOM and Render Tree. This requirement still exists and as such this rule still has validity.

The pre-load processing thread discussed in the previous rule assigns a high priority to JavaScript when it is defined in the HTML head section. Applying this rule demotes the loading of JavaScript to a low priority, which allows all resources defined ahead of it to be loaded first.

Like CSS, web authors can now change browser default priorities using HTML features for managing JavaScript resources, such as the async and defer attributes. These features schedule the loading, parsing and processing of JavaScript resources in different ways, but when used correctly they can be extremely effective for web performance.

Consequently, as with Rule 5, this rule still has merit, but technical advances have extended the options available to web authors for managing JavaScript. Although this rule applies, there are many other aspects to consider when utilizing JavaScript on a web page.

Rule 7 – Avoid CSS Expressions

CSS Expressions are a legacy technology that was implemented in Internet Explorer 5 and then, due to considerable performance issues, deprecated in Internet Explorer 8.

Their purpose was to provide a way of dynamically changing the values of CSS properties. However, several more efficient methods of dynamically modifying CSS now exist.

Consequently, this rule is now no longer valid.

Rule 8 – Make JavaScript and CSS External

Both JavaScript and CSS can be defined on a web page either in-line between HTML <script> and <style> tags or as an external resource that is stored as a file and loaded.

Both definition types have different advantages and disadvantages. The main advantage of implementing this rule is that the resources can be cached, for example in a server, browser or network cache, so that secondary web pages can load the resources they need much faster. CDN caches can also be exploited, enabling primary loaded web pages to benefit as well.

The main beneficiaries of this rule are the secondary web pages, assuming a high degree of commonality of the external JavaScript and CSS files across the pages on the website. Consequently, this rule is still highly valid as a best practice for JavaScript and CSS resources. However, as noted in previous sections, there are many newer best practices that apply to both CSS and JavaScript that take advantage of both browser and other technology enhancements over the past decade. Therefore, although the rule applies, it must be viewed in conjunction with newer best practices, and the correct best practice to apply will be determined by the specific circumstances of the web page.

Rule 9 – Reduce DNS Lookups

As the directory of the internet, the Domain Name System (DNS) is used to provide the location of each required resource and enable the browser to establish a connection with the server that owns the resource. Reducing the number of DNS lookups therefore makes perfect sense, as each lookup can add tens or even hundreds of milliseconds to a request.

In Rule 1, Make Fewer HTTP Requests, we covered how browsers limit the number of concurrent HTTP/1 connections to a server. Consequently, a DNS lookup could occur for each resource that resides on a different server. The keep-alive directive helped to limit this by reusing established connections, but web pages requiring many resources on multiple servers are still able to place a high demand on the DNS.

HTTP/2 has made a major impact on this, as only a single connection, requiring a single DNS lookup, is necessary to an HTTP/2 server. However, although fewer connections are being made, care should be taken over which DNS service providers your website needs to connect with, as this can have an impact on performance.
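A quick way to gauge a page's exposure to DNS is to count the distinct hostnames its resources are loaded from, since each one needs at least one lookup on a cold cache. The sketch below assumes a hypothetical list of resource URLs. In practice the list might come from a HAR file or a waterfall export.

```python
from urllib.parse import urlsplit


def distinct_hostnames(resource_urls):
    """Return the set of hostnames referenced by a page's resources."""
    hostnames = set()
    for url in resource_urls:
        hostname = urlsplit(url).hostname
        if hostname:
            hostnames.add(hostname)
    return hostnames


# Hypothetical resources for a single page.
page_resources = [
    "https://www.example.com/index.html",
    "https://www.example.com/css/site.css",
    "https://static.example.com/js/app.js",
    "https://static.example.com/img/hero.jpg",
    "https://analytics.example.net/tag.js",
]

hosts = distinct_hostnames(page_resources)
lookups_needed = len(hosts)  # 3 hostnames, so up to 3 uncached DNS lookups
```

Five resources here need at most three lookups. Consolidating resources onto fewer hostnames reduces that number further.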

This is due to the variance in performance between DNS service providers. Using statistics provided by DNSPerf, figure 6 provides a scorecard of current DNS service providers and the average response time they give for each DNS lookup.

The chart shows raw performance which is defined as the speed when querying the nameserver directly. DNSPerf update their statistics hourly and test from over 200 locations worldwide.

As these are ongoing averages, the considerable difference between the best and worst performers is relevant when looking for faster web performance as any delay in DNS lookup directly impacts on time to first byte.

This rule still applies but, as with other rules, its scope should be widened to include the speed and availability (also measured by DNSPerf) of the DNS provider. In addition, DNS technologies have evolved considerably and, with the wider acceptance of IPv6, opportunities exist to address both of these aspects of DNS management.

Rule 10 – Minify JavaScript

An underlying theme of all of these rules is to minimize the amount of data that passes between the server(s) and browser. This rule directly states this as its objective. Minification of JavaScript removes all the unnecessary characters from the raw code such as removal of blanks, comments, tabs and other non-necessary characters.
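To make the idea concrete, here is a deliberately naive sketch that strips comments, indentation and blank lines. It is an illustration only and not a safe minifier: it would, for example, corrupt any string literal containing `//`, such as a URL. Production tools parse the code properly and also shorten identifiers. The function name and sample source are assumptions.

```python
import re


def naive_minify(js_source: str) -> str:
    """Strip comments, indentation and blank lines from simple JavaScript."""
    # Remove /* ... */ block comments, including multi-line ones.
    without_blocks = re.sub(r"/\*.*?\*/", "", js_source, flags=re.DOTALL)
    kept_lines = []
    for line in without_blocks.splitlines():
        # Remove // line comments, then leading and trailing whitespace.
        line = re.sub(r"//.*$", "", line).strip()
        if line:
            kept_lines.append(line)
    # Newlines are kept so that statements without semicolons stay separate.
    return "\n".join(kept_lines)


sample = """
/* Add two numbers. */
function add(a, b) {
    // Return the sum.
    return a + b;
}
"""

minified = naive_minify(sample)
bytes_saved = len(sample) - len(minified)
```

Even on this tiny sample most of the removed bytes are comments and indentation, which is where much of the saving comes from on larger files.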

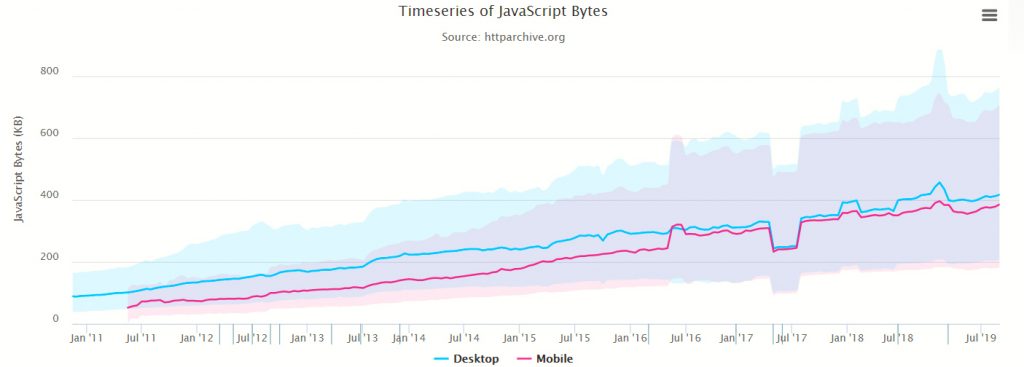

This process can lead to a significant reduction in the size of the JavaScript code. This is a necessary benefit especially as the amount of JavaScript that is loaded on each web page increases year on year.

The HTTPArchive chart in figure 7 shows how the use of JavaScript has dramatically increased over the past decade. Consequently, this rule is quite visionary, as it complements the Gzip rule by further reducing the amount of JavaScript transferred across the network.

The main drivers of this growth are the adoption of JavaScript-based framework technologies that have evolved over the decade and the persistent use of third party tools that all employ JavaScript libraries to deliver their services.

As JavaScript is not directly on the critical render path but interacts with its processes, both today and a decade ago its minification is a necessary web performance enhancement. However, this rule could be extended to include the minification of both HTML and CSS resources to gain the same type of compression-based benefits. Implementing this can be achieved either in the production delivery phase (recommended), in a CMS or by a CDN as some now provide this functionality.

Rule 11 – Avoid Redirects

As a redirect injects one or more interim links into the route taken to the target URI, the objective of this rule is to minimize the route taken to the target web page or necessary web page resources. Redirects not only take time to complete, they also consume network and CPU resources that could be used to benefit other tasks.

The original HTTP specifications defined 4 redirect options, the most common being 301 Moved Permanently and 302 Found. However, over time the specification has been extended to include a wider range of redirect options that web authors must ensure their websites can address. The current IETF-defined 3xx status range is:

- HTTP 300 Multiple Choices

- HTTP 301 Moved Permanently

- HTTP 302 Found

- HTTP 303 See Other

- HTTP 304 Not Modified

- HTTP 305 Use Proxy

- HTTP 306 Switch Proxy

- HTTP 307 Temporary Redirect

- HTTP 308 Permanent Redirect

- HTTP 308 Resume Incomplete (a non-standard use of 308 by some resumable-upload APIs, not part of the IETF definition)

Redirects are costly but they can also result in a never-ending loop of redirects. Browsers protect against this and fail web pages caught in this trap. However, most redirections have the potential to delay the building of a web page and should therefore be avoided.
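The cost of a redirect chain, and the loop protection described above, can be sketched with a simple lookup table standing in for the network. The function name, the URLs and the limit of 20 hops are assumptions for illustration. Browsers apply their own limits.

```python
def follow_redirects(start_url, redirect_map, max_hops=20):
    """Return the chain of URLs visited, raising on a loop or too many hops.

    redirect_map stands in for the network: it maps a URL to the
    Location it would redirect to.
    """
    chain = [start_url]
    current = start_url
    while current in redirect_map:
        current = redirect_map[current]
        if current in chain:
            raise ValueError(f"redirect loop detected at {current}")
        chain.append(current)
        if len(chain) - 1 > max_hops:
            raise ValueError("too many redirects")
    return chain


# Hypothetical chain: scheme upgrade, then host change, then path change.
redirects = {
    "http://example.com/": "https://example.com/",
    "https://example.com/": "https://www.example.com/",
    "https://www.example.com/": "https://www.example.com/home",
}

chain = follow_redirects("http://example.com/", redirects)
extra_round_trips = len(chain) - 1  # 3 extra requests before any content arrives
```

Collapsing this chain into a single redirect straight to the final URL would save two of the three extra round trips.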

Redirection also has a critical part to play in SEO activities making the simple instruction of this rule even more valid today.

Rule 12 – Remove Duplicate Scripts

Ten years on, there are still many websites that include duplicate scripts on their web pages. This is not always in their direct control, as third-party software may include duplicates of scripts already loaded. In this case, governance should be put in place with the third party to prevent it.

Experience has shown that duplicate scripts, or different versions of scripts, especially frameworks, such as jQuery, can be loaded more than once on a page load.

This generates unnecessary workload as the resources must be downloaded, and then the code must be parsed and executed. Inevitably, much of the code will be unused.
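A basic check for this problem can be sketched with the HTML parser in the Python standard library. The class and function names and the sample page are assumptions, and the check only catches exact repeats of the same URL. Spotting two different versions of the same library would need extra matching on file names.

```python
from collections import Counter
from html.parser import HTMLParser


class ScriptSourceCollector(HTMLParser):
    """Collect the src attribute of every external script tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def duplicate_scripts(html: str) -> dict:
    """Return each script URL that is referenced more than once, with its count."""
    collector = ScriptSourceCollector()
    collector.feed(html)
    counts = Counter(collector.sources)
    return {src: count for src, count in counts.items() if count > 1}


# Hypothetical page that loads the same library in the head and the body.
page = """
<html><head>
<script src="/js/jquery.min.js"></script>
<script src="/js/app.js"></script>
</head><body>
<script src="/js/jquery.min.js"></script>
</body></html>
"""

duplicates = duplicate_scripts(page)
```

A check like this could run as part of release management, so that duplicates are caught before they reach production.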

Although rule 12 applies to JavaScript, it should also be applied to CSS, images and fonts that get requested as duplicates or surplus to requirement.

Modern release management should prevent this problem from happening, but unfortunately it still occurs, so this rule remains necessary.

Rule 13 – Configure ETags

An entity tag (ETag) is a method by which a server can create a checksum for a resource, the entity, that is unique to that particular version of the resource. The ETag is then sent with the resource in an HTTP header for future use.

If a resource has an Expires header set, once the expiry date occurs the ETag is sent back to the origin server in the If-None-Match request header to validate it as the current version. If the resource is still valid, the ETag will match and an HTTP 304 Not Modified will be returned. This avoids a full reload of the resource from the server across the network to the client. Any update to the original resource will result in a different checksum being generated in the ETag on the server, and the resource will be reloaded.
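The validation flow can be sketched as a small server-side function. This is a simplified illustration under stated assumptions: the tag is derived from a hash of the content only, the function names are hypothetical, and the comparison handles a single tag rather than the lists, wildcard and weak validators that the If-None-Match header also allows.

```python
import hashlib
from typing import Optional


def make_etag(body: bytes) -> str:
    """Derive a quoted entity tag from the content alone (an assumed scheme)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str] = None):
    """Return (status, headers, payload) for a conditional GET."""
    etag = make_etag(body)
    if if_none_match == etag:
        # The client's copy is current, so no body is sent.
        return 304, {"ETag": etag}, b""
    return 200, {"ETag": etag}, body


# First request: full response with an ETag.
status, headers, payload = respond(b"body { color: black; }")

# Revalidation with the stored tag: 304 and an empty payload.
status_again, _, payload_again = respond(b"body { color: black; }", headers["ETag"])

# The resource changed on the server: the tags differ, so it is reloaded.
status_changed, _, payload_changed = respond(b"body { color: navy; }", headers["ETag"])
```

Because the tag in this sketch depends only on the content, any server holding the same version of the file would generate the same value.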

In principle, this method works well for single-server environments, but where load balancers or multiple-server and dynamic serving environments exist the value of this approach becomes limited. This is because the generation of the ETag generally includes server-specific attributes (such as the inode), and for a validation the ETag may be generated on a different server, so the ETags will not match.

ETags are still used in many environments, but the Last-Modified header is a valid and equally effective alternative, as it validates on the timestamp of the resource and does not rely on server inode values.

Speeding up access to resources and saving both CPU and network resources is the primary objective of this rule, and this objective is still valid. However, due to advances in infrastructure complexity, using ETags is becoming a redundant approach and consideration should be given to replacing them with Last-Modified caching directives.

Rule 14 – Make AJAX Cacheable

The focus of the previous 13 rules has been on improving the performance of loading a requested web page or one of its resources to a client browser. However, several of the principles discussed in these rules also apply to AJAX calls initiated by the web page or through user interaction with a web page.

One of the most important rules to apply relates to enabling a response to be cached using Rule 3, Add an Expires Header. When an AJAX response rarely changes, this makes it available if it is required later in the current or a subsequent session.

However, other rules are relevant including:

- Gzip Components

- Reduce DNS Lookups

- Minify JavaScript

- Avoid Redirects

- Configure ETags

Consequently, this rule is valid for fully HTTP-loaded web pages and is also highly effective in a single page application (SPA) environment to contribute to a high-performance user experience.

At the end of the decade

Taking a fresh look at Steve Souders' original web performance rules shows how visionary they were as, despite the advances in technology, many of them are still valid today.

However, many of the rules, as discussed in the appropriate section, could benefit from review and the inclusion of new concepts to cover how browsers now build web pages and how infrastructure technologies deliver the necessary resources.

The Performance Irony

Over the last decade the development and adoption of standards such as HTML5, CSS3 and ECMAScript have complemented the evolution of existing technologies and the emergence of new ones, all of which have had a major influence on how the internet works and is used.

Some of the technologies that have emerged relevant to web performance include:

- Cloud

- HTTP/2

- Single Page Applications (SPA)

- JavaScript Frameworks

- Edge computing

- Progressive Web Applications (PWA)

- Accelerated Mobile Pages (AMP)

- 5G

- WebAssembly (Wasm)

- HTTP/3

Many of these are still evolving or in their early phases of implementation, raising the possibility of further performance gains. However, when comparing key performance metrics over the decade, HTTPArchive shows us that onLoad, the original metric of choice, has hardly changed on desktop and, on mobile, is over 3 times slower.

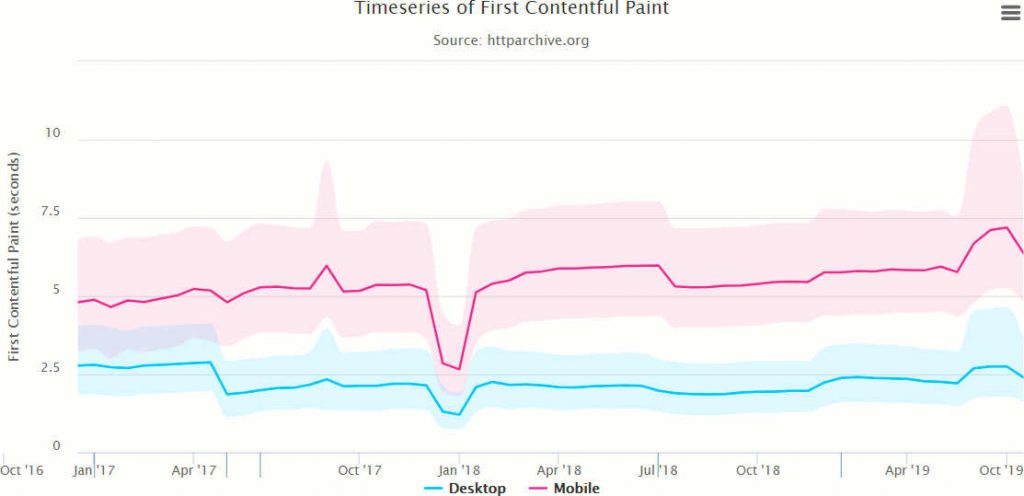

User experience is the current focus, and render start, or First Contentful Paint (FCP), is the metric aligned to this. Using HTTPArchive data for the past 3 years, figure 8 shows that the desktop value is relatively unchanged and the mobile value has been slowly increasing over the same period.

As a final point, there is an unfortunate irony here: the decade has seen considerable investment in performance, but overall web performance, and with it user experience, has not been able to capitalize on this and can therefore be considered to have degraded.

Consequently, as we go into the 2020s considerable work in web performance, processes and practices, is still necessary if we are to exploit the technologies available to improve web performance and also reverse these trends.