Nowadays it is rare for a website or web application to go live without passing performance criteria that the business has defined in advance. These normally come down to a number of webpages or transactions per second, each loaded within a specified download time. Testing the website against this type of performance criteria is necessary to ensure the end-user experience is as intended.

However, as more websites move onto cloud infrastructures, the benefits of cloud must also be proven in load testing. These include the dynamic addition of infrastructure through auto-scaling and the setting of predictable performance levels with Database Transaction Units (DTUs, in Microsoft’s Azure). This is not only to ensure that these features work as expected, but also to ensure that costs are not unnecessarily incurred, or business lost, through their mis-configuration or under-assignment.

Auto-scaling and Performance Load Testing

Auto-scaling is a major benefit of cloud environments, so it is important that it works correctly as the load on your website fluctuates; otherwise you may end up paying for services you are not using. Fortunately, cloud vendors have made auto-scaling quick and easy to configure, but when dealing with dynamic loads on a system you need to ensure that the rules will work when you need them most.

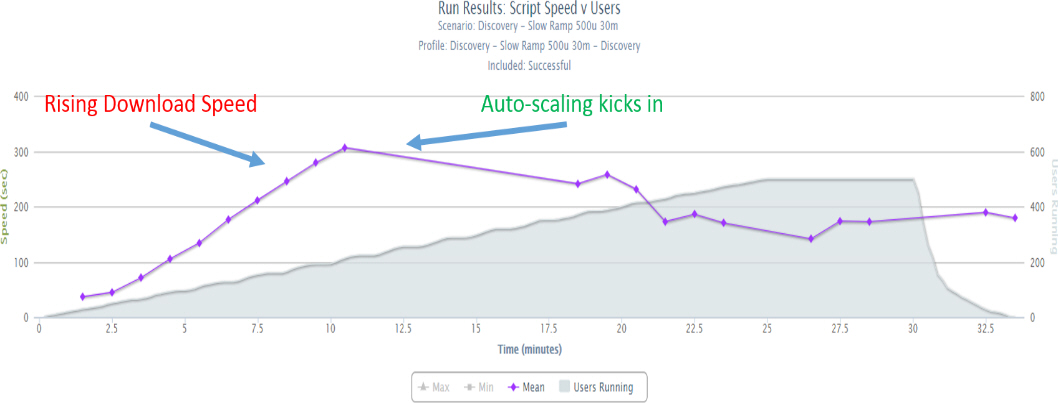

In a recent load test the client began a user journey (UJ) test that would continually increase load up to 500 concurrent users over a 30 minute period. They were confident that their application would perform well, but we found that, with auto-scaling configured as it was, the end-user experience would have been extremely poor. In our results chart we can see that after 2.5 minutes of the test, the download time of the UJ started to increase at an alarming rate.

From our previous benchmark test, the UJ should complete in approximately 47 seconds, but in the auto-scaling test the download time started to climb very quickly as the load went over 50 users. We observed that CPU utilisation was also moving towards 100% at this point, but the planned auto-scaling had not kicked in.
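To put those figures in context, the sketch below works out how quickly users were being added. It assumes the ramp was linear, which is not stated in the test description, and the function names are purely illustrative.

```python
TARGET_USERS = 500   # peak concurrent users, from the test described above
RAMP_MINUTES = 30    # ramp duration in minutes, from the test described above


def users_at(minute: float) -> float:
    """Concurrent users at a given minute, ASSUMING a linear ramp."""
    clamped = min(max(minute, 0.0), RAMP_MINUTES)
    return TARGET_USERS * clamped / RAMP_MINUTES


def minutes_to_reach(users: float) -> float:
    """Minutes into the ramp at which a given user count is reached."""
    return RAMP_MINUTES * users / TARGET_USERS


print(round(users_at(2.5), 1))   # 41.7 users at 2.5 minutes
print(minutes_to_reach(50))      # 3.0 minutes to reach 50 users
```

Under that assumption roughly 17 users join every minute, so the 50-user mark arrives about three minutes into the test, long before a rule with a multi-minute evaluation window has a chance to react.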

Auto-scaling had been configured to bring more resources to bear from 70% CPU utilisation, but only if CPU had been at 70% or greater for 10 minutes. We had found the reason why more servers had not spun up. This proved to be the case: about 10 minutes later, once auto-scaling had occurred, the download time started to improve thanks to the extra resources in the environment.

The client quickly configured a new rule stating that if CPU is at 80% or greater, the extra resources are spun up straight away. This change to the configuration proved its worth when CPU started to climb again at about 18 minutes. It was sufficient to contain the increasing workload on the CPU resources and start to reduce the download time.
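The difference between the two rules can be sketched in a few lines. This is a simplified model rather than how any cloud vendor evaluates its rules: it assumes one CPU sample per minute, and the CPU trace is hypothetical, not data from the test.

```python
from typing import Optional, Sequence


def first_trigger_minute(cpu_by_minute: Sequence[float],
                         threshold: float,
                         sustain_minutes: int) -> Optional[int]:
    """Return the minute at which a scale-out rule first fires, or None.

    The rule fires once CPU has been at or above `threshold` for
    `sustain_minutes` consecutive one-minute samples.
    """
    needed = max(sustain_minutes, 1)
    run = 0  # consecutive samples at or above the threshold
    for minute, cpu in enumerate(cpu_by_minute):
        run = run + 1 if cpu >= threshold else 0
        if run >= needed:
            return minute
    return None


# Hypothetical trace: CPU starts at 30% and climbs 15 points a minute to 100%.
cpu_trace = [min(100, 30 + 15 * m) for m in range(30)]

print(first_trigger_minute(cpu_trace, threshold=70, sustain_minutes=10))  # 12
print(first_trigger_minute(cpu_trace, threshold=80, sustain_minutes=1))   # 4
```

In this made-up trace the sustained rule leaves the servers saturated for several minutes before it fires, while the immediate rule reacts on the first sample over its threshold. The trade-off is that an immediate rule can also react to short spikes, which is worth checking under load as well.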

In the graph we can see that the UJ never recovers to its target time of 47 seconds. Further investigation showed that this was due to a secondary problem, also identified through performance load testing.

Database Transaction Units (DTUs)

Because we could now see that CPU utilisation had settled at an acceptable level, this secondary problem had to be something new.

The client’s databases are hosted in Microsoft’s Azure, where it is possible to procure a specific performance level for your database. Microsoft commits a certain level of resources to your specific database with the intention of delivering a predictable level of performance. The Azure platform implements this approach through the concept of Database Transaction Units (DTUs), and the price Microsoft charges depends on how performant you want your databases to be, so it is important to get these settings right.

Further analysis showed that the number of DTUs assigned to one of the three databases used in this UJ had reached its maximum. Consequently, the database was unable to work any faster.
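A check of this kind can be sketched as follows, assuming you have exported DTU utilisation percentages per database for the duration of the test. The database names, sample values and thresholds are all hypothetical and not taken from the client's results.

```python
from typing import Dict, List


def saturated_databases(dtu_percent: Dict[str, List[float]],
                        limit: float = 95.0,
                        min_fraction: float = 0.5) -> List[str]:
    """Return databases whose DTU utilisation sat at or above `limit`
    for at least `min_fraction` of the samples taken during the test."""
    flagged = []
    for name, samples in dtu_percent.items():
        if not samples:
            continue  # no data collected for this database
        at_limit = sum(1 for value in samples if value >= limit)
        if at_limit / len(samples) >= min_fraction:
            flagged.append(name)
    return sorted(flagged)


# Hypothetical utilisation samples (percent of assigned DTUs) for three databases.
samples = {
    "db_a": [40, 55, 60, 58, 62],
    "db_b": [96, 98, 100, 100, 100],
    "db_c": [20, 25, 30, 28, 27],
}

print(saturated_databases(samples))  # ['db_b']
```

A database that spends most of the test pinned at its limit is a candidate either for more DTUs or for a review of how the application uses it.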

Of course, the simple thing to do at this point would be to add more DTUs, but over time this could prove expensive, becoming a never-ending cost to the operating budget. Instead, the client decided it was worth reviewing how this specific database was being used in this UJ. Using the knowledge gained of how the application works under load, the client was able to make some minor enhancements that resolved the problem without incurring further infrastructure costs.

It’s Not a Cloudy Day Though

As a mainstream technology, cloud delivers many benefits and advanced infrastructure features that enable consistent performance and the dynamic scaling of services. However, regular and effective performance load testing is essential to maximising the cost benefits while ensuring that your website or application performs as expected for your end-users when it is most needed.